One reason Magento is popular with online retailers is the abundance of options it offers for customization and integration. In today’s world of online shopping, a Magento store can do a great deal. To stay ahead of competitors, Magento point-of-sale (POS) integration is exactly what is required: it is easy to use and powerful enough to improve the overall customer journey.

Why is there a need for POS integration?

In today’s highly competitive market, where online store owners leave no stone unturned to streamline the shopping experience, it is important to have POS integration in your online store. Magento POS integration is built on Progressive Web App (PWA) technology, which provides a wireless, customer-facing touch-point screen for communicating with shoppers. Magento POS has helped businesses in many fields, including fashion, health and beauty retail, accessories, sports and toys, hardware and gifts, e-cigarettes, and food and drink retail.

It also automates data exchange between systems and streamlines business processes. Another useful feature is that it visualizes the checkout procedure with product thumbnail images and screens that look like the storefront. POS integration comes with a responsive design, which makes it compatible even with tablets. Given these features and the level of competition, it is important for online retailers to integrate it into their stores.

Benefits of POS Integration

Having covered the need for POS integration in an online store, it is worth looking at the benefits it provides:

• Increased sales with diverse payments

Magento POS accepts many kinds of payment, including credit cards, debit cards, cash, Stripe, Zippay, Tyro, Authorize.net, and more. With the flexible web POS, customers are free to pay whichever way they like. They can mix multiple payment methods in a single order, or even pay part of the total now and the rest later, without any confusion at the till.
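To make the idea of mixed and partial payments concrete, here is a minimal Python sketch. It is not Magento POS code: the `Order` class, its methods, and the payment method names are hypothetical and only illustrate how an order can track several payments and the balance still due.

```python
from dataclasses import dataclass, field
from decimal import Decimal
from typing import List, Tuple


@dataclass
class Order:
    """Hypothetical order that can be settled with several payments."""
    total: Decimal
    payments: List[Tuple[str, Decimal]] = field(default_factory=list)

    def pay(self, method: str, amount: Decimal) -> None:
        # Reject payments that are non-positive or larger than what is owed.
        if amount <= 0:
            raise ValueError("payment amount must be positive")
        if amount > self.balance_due():
            raise ValueError("payment exceeds the balance due")
        self.payments.append((method, amount))

    def amount_paid(self) -> Decimal:
        return sum((amount for _, amount in self.payments), Decimal("0"))

    def balance_due(self) -> Decimal:
        return self.total - self.amount_paid()

    def is_settled(self) -> bool:
        return self.balance_due() == 0


# One order, two payment methods now, the rest left for later.
order = Order(total=Decimal("120.00"))
order.pay("cash", Decimal("50.00"))
order.pay("credit_card", Decimal("40.00"))
remaining = order.balance_due()  # amount the customer still owes
```

Amounts are held as `Decimal` rather than `float` so that currency arithmetic does not pick up rounding errors.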

• Speeds up the checkout process

With Magento POS, checkout becomes easier and quicker because it integrates readily with a printer, cash drawer, barcode scanner, and credit card reader. This helps users create orders quickly and visualize the checkout procedure through product thumbnail images.

• Fast transactions

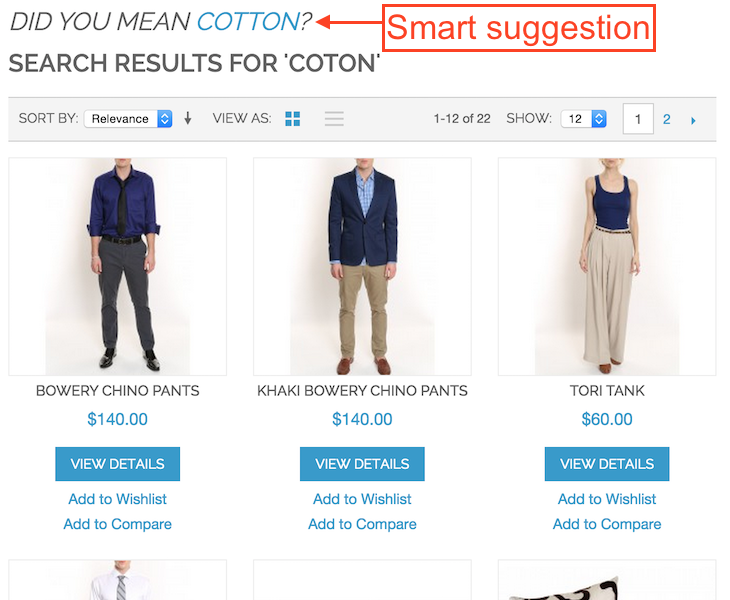

Magento’s web POS integration makes searching and filtering products quick and easy using attributes such as name, SKU, price, and color. It also allows a custom sale with comprehensive information to be added in a single click.
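Attribute-based search of this kind can be sketched in a few lines of Python. This is an illustration under assumptions, not the POS implementation: the `Product` fields mirror the attributes named above, while `search_products` and the sample catalog are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Product:
    name: str
    sku: str
    price: float
    color: str


def search_products(
    products: List[Product],
    text: str = "",
    color: Optional[str] = None,
    max_price: Optional[float] = None,
) -> List[Product]:
    """Return products matching every filter that was supplied."""
    needle = text.strip().lower()
    results = []
    for p in products:
        # Free-text search matches against either the name or the SKU.
        if needle and needle not in p.name.lower() and needle not in p.sku.lower():
            continue
        if color is not None and p.color.lower() != color.lower():
            continue
        if max_price is not None and p.price > max_price:
            continue
        results.append(p)
    return results


# Hypothetical sample catalog.
catalog = [
    Product("Running Shoes", "SH-001", 79.0, "blue"),
    Product("Trail Shoes", "SH-002", 95.0, "red"),
    Product("Sports Cap", "CP-010", 15.0, "blue"),
]
blue_shoes = search_products(catalog, text="shoes", color="blue")
```

Each filter is optional, so the same function covers a plain name lookup, a SKU scan, or a combined query.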

• Easy flow of Magento orders to POS

Magento POS is built with up-to-date technology and is highly responsive, so all orders flow automatically to the POS system and show up there as complete sales.

• Saves time and effort

Magento POS saves staff time and effort by eliminating the need to enter data manually. This also reduces the chance of human error, which saves even more time.

• Increased revenue

Because checkout with Magento POS integration takes just 10 seconds, the store runs smoothly even during peak hours. Customer support is also available 24/7 to keep the store functioning smoothly. This smooth operation results in higher overall revenue for the store.

• Increased profits

Magento POS uses the browser’s IndexedDB storage, which keeps order data safe on the device. This enables retailers to make sales at trade shows and offline events regardless of network status, which results in increased overall profits.
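The offline pattern described here, recording sales locally and syncing them once a connection is available, can be sketched as follows. This is only an illustration: a plain Python list stands in for the browser’s IndexedDB storage, and `OfflineOrderQueue`, `fake_send`, and the `network` flag are hypothetical names, not part of Magento POS.

```python
from typing import Callable, Dict, List


class OfflineOrderQueue:
    """Holds orders locally until they can be sent to the server."""

    def __init__(self, send: Callable[[Dict], bool]) -> None:
        self._send = send              # returns True when the upload succeeds
        self._pending: List[Dict] = []  # stand-in for local browser storage

    def record(self, order: Dict) -> None:
        # Always store locally first, so a sale is never lost while offline.
        self._pending.append(order)

    def sync(self) -> int:
        """Send pending orders in order; stop at the first failure."""
        sent = 0
        while self._pending:
            if not self._send(self._pending[0]):
                break
            self._pending.pop(0)
            sent += 1
        return sent

    def pending_count(self) -> int:
        return len(self._pending)


# Hypothetical transport: succeeds only while the network flag is on.
network = {"online": False}
received: List[Dict] = []


def fake_send(order: Dict) -> bool:
    if not network["online"]:
        return False
    received.append(order)
    return True


queue = OfflineOrderQueue(fake_send)
queue.record({"id": 1, "total": "25.00"})
queue.record({"id": 2, "total": "40.00"})
queue.sync()  # network is down, so both orders stay queued
```

Once `network["online"]` is switched to `True`, another call to `queue.sync()` uploads the queued orders in the sequence they were recorded.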

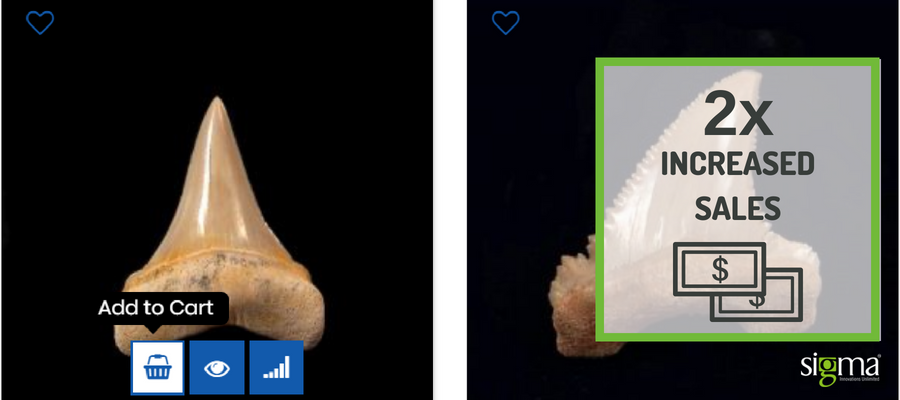

Now is the time to sync physical stores with Magento in real time and engage online audiences as well. Knowing all the benefits of Magento POS, retailers should integrate it into their online stores starting today to gain 2x more sales and growth.

Buried Treasure Fossil is the place for new and seasoned fossil collectors, providing a wide range of incredible fossils to choose from. As a purely online business, BTF strategy is to offer great fossils at great prices, and super service for customers across the USA. The relatively young company is owned by Gary Greaser, an avid enthusiast, and collector of the fossils. Having realized that migrating to

Buried Treasure Fossil is the place for new and seasoned fossil collectors, providing a wide range of incredible fossils to choose from. As a purely online business, BTF strategy is to offer great fossils at great prices, and super service for customers across the USA. The relatively young company is owned by Gary Greaser, an avid enthusiast, and collector of the fossils. Having realized that migrating to

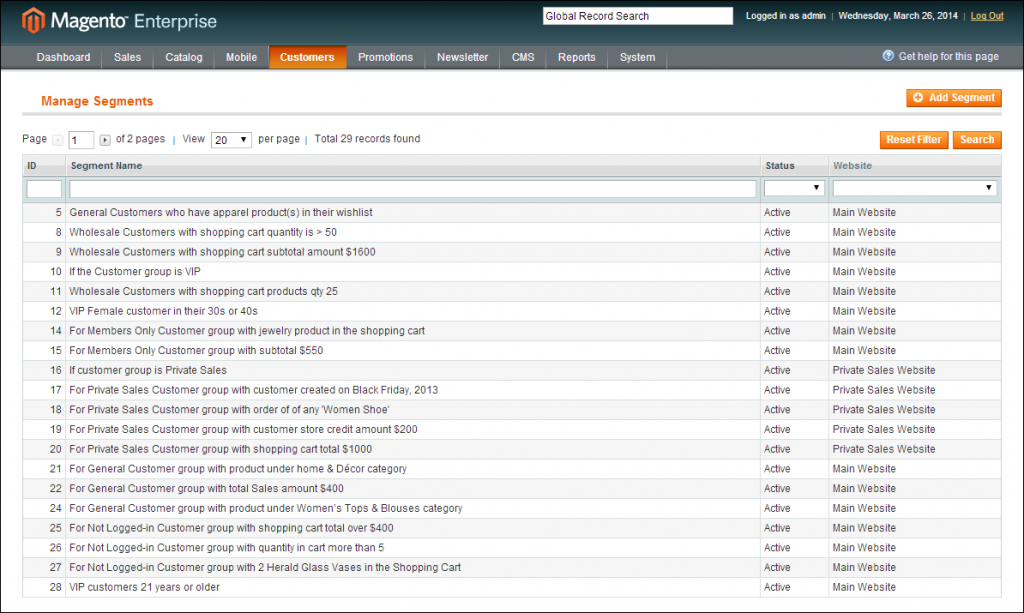

2. Advanced Targeting and Segmentation tools

2. Advanced Targeting and Segmentation tools

5. B2B Support –

5. B2B Support –

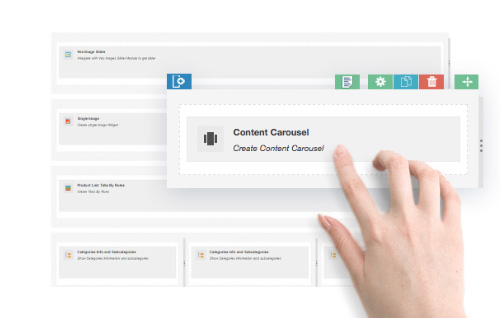

Modals that are controlled typically with a script and overlaid as a cover over the page’s elements has been an effective user interface medium for quite some time. The only change that has modified the trend is that these models are now full-screen so that both the mobile and computer

Modals that are controlled typically with a script and overlaid as a cover over the page’s elements has been an effective user interface medium for quite some time. The only change that has modified the trend is that these models are now full-screen so that both the mobile and computer  These two features are forming the face of most e-commerce

These two features are forming the face of most e-commerce